The Resurgence of Analog Computers: How They're Revolutionizing AI in the Digital Age

Analog computers are not just relics of the past, but powerful tools that can solve certain complex problems faster and more efficiently than digital computers.

In this blog post, you will learn about the history, principles, and applications of analog computers, from the ancient Antikythera mechanism to how they might just be a key to revolutionizing the development of modern artificial intelligence. You will also discover the advantages and disadvantages of analog computing, and how it can complement digital computing in the future.

Introduction

Analog computers are devices that use the continuous variation of physical quantities, such as electrical potential, fluid pressure, or mechanical motion, to model the problem being solved. In contrast, digital computers represent varying quantities symbolically and by discrete values of both time and amplitude.

History

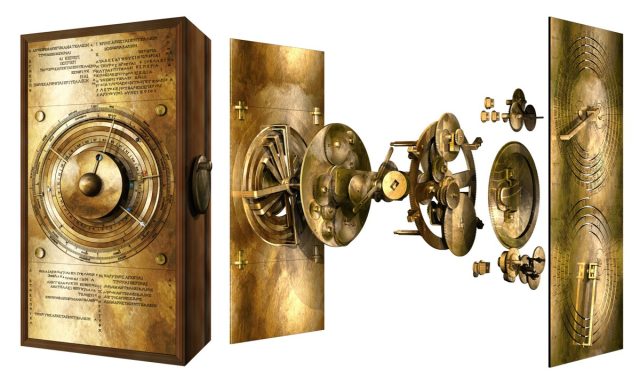

Analog computers have a long and fascinating history, dating back to ancient times. One of the earliest examples is the Antikythera mechanism, a remarkable ancient device that could calculate and display various astronomical phenomena, such as the phases of the moon, the positions of the planets, the dates of eclipses, and even the dates of the Olympic games.

It consisted of a complex system of gears and dials operated by a hand crank, and it is often considered the oldest known analog computer and a masterpiece of mechanical engineering. The mechanism was found in a shipwreck near the island of Antikythera in 1901. It was made of bronze, had at least 30 gears, and was housed in a wooden box with inscriptions that explained its functions. It could also show the cycles of the ancient Greek calendars and the zodiac signs. It was probably designed and built by Greek scientists in the late 2nd or early 1st century BCE.

Figure: The Antikythera mechanism as visualized in its entirety

Another example of an early analog computer is the tide predictor, developed by William Thomson (later known as Lord Kelvin) in 1872-73. It used a system of pulleys and weights to add up the periodic effects of the gravitational forces of the Sun and Moon on the Earth's oceans. It could produce accurate predictions of the tide levels at various locations and times, saving a week's worth of tedious hand calculation that would otherwise have been needed to work out the tidal curves.

Figure: The first tide predicting machine (TPM) was built in 1872 by the Légé Engineering Company
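To get a feel for what the tide predictor was adding up, here is a minimal sketch in Python. It assumes the tide can be written as a sum of cosine components, one per pulley. The periods are close to three well-known tidal constituents, but the amplitudes and phases are made up for illustration, and the names `CONSTITUENTS` and `tide_height` are hypothetical.

```python
import math

# Hypothetical tidal constituents: (amplitude in metres, period in hours, phase in radians).
# The periods approximate the M2, S2 and K1 constituents; amplitudes and
# phases are invented for this example and do not describe a real port.
CONSTITUENTS = [
    (1.20, 12.42, 0.0),   # principal lunar semidiurnal (M2)
    (0.40, 12.00, 0.5),   # principal solar semidiurnal (S2)
    (0.15, 23.93, 1.0),   # lunisolar diurnal (K1)
]


def tide_height(t_hours, constituents=CONSTITUENTS, mean_level=0.0):
    """Sum the cosine components, much as the pulley chain sums displacements."""
    return mean_level + sum(
        amp * math.cos(2.0 * math.pi * t_hours / period + phase)
        for amp, period, phase in constituents
    )


if __name__ == "__main__":
    for hour in range(0, 25, 3):
        print(f"t = {hour:2d} h  height = {tide_height(hour):+.2f} m")
```

The machine did the same summation mechanically and continuously, tracing the resulting curve on paper instead of printing numbers.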

In the late 19th and early 20th centuries, analog computers became more sophisticated and complex, with applications in science, engineering, and warfare. For instance, A.A. Michelson and S.W. Stratton built a harmonic analyzer in 1898, which could generate and combine sinusoidal motions to analyze periodic phenomena. Vannevar Bush, an American electrical engineer, invented the differential analyzer in the early 1930s, which used mechanical integrators to solve differential equations. This machine was used for various purposes, such as calculating artillery trajectories, studying electrical networks, and modeling weather patterns.

With the advent of electronic technology, analog computers evolved to use electrical components, such as operational amplifiers, resistors, capacitors, and diodes, to manipulate voltages and currents. These devices could perform a variety of mathematical operations, such as inversion, summation, differentiation, and integration, by connecting them in different configurations. Electronic analog computers were widely used in scientific and industrial applications until the 1950s and 1960s, when they started to be replaced by digital computers.
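As a rough illustration of how integrators wired in a loop solve a differential equation, the sketch below steps through the classic patch for x'' = -x: two integrators in series, with the negated position fed back as the acceleration. It is a digital simulation under simplifying assumptions (ideal components, no noise, and it ignores the sign inversion that real op-amp integrators introduce). The function name `simulate_oscillator` and its parameters are hypothetical.

```python
import math


def simulate_oscillator(x0=1.0, v0=0.0, dt=1e-4, t_end=math.pi):
    """Step a two-integrator loop that solves x'' = -x.

    Integrator 1 turns acceleration into velocity, integrator 2 turns
    velocity into position, and an inverter feeds -x back as the
    acceleration. Each integrator is modeled here as a running sum.
    """
    x, v = x0, v0
    steps = int(round(t_end / dt))
    for _ in range(steps):
        a = -x          # inverter: feed back the negated position
        v += a * dt     # integrator 1: velocity accumulates acceleration
        x += v * dt     # integrator 2: position accumulates velocity
    return x, v


if __name__ == "__main__":
    x, v = simulate_oscillator()
    print(f"simulated x(pi) = {x:.4f}, exact cos(pi) = {math.cos(math.pi):.4f}")
```

Note the difference in kind: the simulation needs tens of thousands of small steps, whereas the analog circuit produces the same curve as one continuous voltage.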

One of the main advantages of analog computers over digital computers is speed: for some problems they can operate much faster, since they do not need to convert continuous signals into discrete bits and perform arithmetic operations on them one step at a time. Because their variables are continuous, analog computers also avoid the quantization and rounding errors of digital arithmetic, which can make some nonlinear problems easier to handle.

Figure: Fast Fourier Transform

However, analog computers also have some drawbacks that limit their accuracy and reliability. For example, analog computers are susceptible to noise and interference from external sources, which can affect their output signals. Analog computers also have limited precision and resolution, since they depend on the quality and calibration of their components. Moreover, analog computers are not very flexible or scalable, since they require a different physical setup for each problem.
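The trade-off between the two kinds of error can be sketched in a few lines of Python. This is a toy model under stated assumptions: the digital side is a uniform quantizer with a given number of bits, and the analog side is the true value plus Gaussian noise. The function names and the noise levels are hypothetical, chosen only for illustration.

```python
import math
import random


def quantize(value, bits, full_scale=1.0):
    """Round a value to the nearest of 2**bits evenly spaced levels."""
    step = 2.0 * full_scale / (2 ** bits - 1)
    clipped = max(-full_scale, min(full_scale, value))
    return round((clipped + full_scale) / step) * step - full_scale


def noisy(value, noise_rms, rng):
    """Model an analog signal as the true value plus Gaussian noise."""
    return value + rng.gauss(0.0, noise_rms)


def rms_error(samples, transform):
    """Root-mean-square difference between each sample and its transformed copy."""
    return math.sqrt(sum((transform(s) - s) ** 2 for s in samples) / len(samples))


if __name__ == "__main__":
    rng = random.Random(0)
    signal = [math.sin(2 * math.pi * k / 1000) for k in range(1000)]
    for bits in (4, 8, 12):
        err = rms_error(signal, lambda s: quantize(s, bits))
        print(f"{bits:2d}-bit quantization: RMS error {err:.2e}")
    for noise in (1e-1, 1e-2, 1e-3):
        err = rms_error(signal, lambda s: noisy(s, noise, rng))
        print(f"analog noise {noise:.0e}: RMS error {err:.2e}")
```

In the digital case you buy precision by adding bits. In the analog case you buy it with better components and shielding, which is usually the harder of the two.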

Analog Artificial Intelligence?

Despite these limitations, analog computers may be making a comeback in the field of artificial intelligence (AI), especially for applications that involve processing large amounts of data or performing complex computations in real time. One of the reasons for this resurgence is that analog computers can mimic some aspects of biological neural networks more naturally than digital computers.

Neural networks are computational models inspired by the structure and function of the brain. They consist of interconnected units called neurons that process information by sending and receiving signals through weighted connections called synapses. Neural networks can learn from data and perform tasks such as classification, regression, clustering, generation, and reinforcement learning.

Digital computers can simulate neural networks in software or run them on specialized hardware such as neuromorphic chips. However, these methods have some drawbacks that limit their performance and efficiency. For example, software simulations on general-purpose processors can be slow and consume a lot of memory and power, largely because the weights must be moved back and forth between memory and the arithmetic units. Neuromorphic chips are faster and more energy-efficient than software simulations, but those built from digital logic still represent signals as discrete values, which introduces quantization errors.

Analog computers can offer an alternative way of implementing neural networks by using continuous signals and components that resemble biological neurons and synapses more closely. For example, some analog components can exhibit nonlinear behaviors such as saturation or hysteresis that are similar to those observed in biological neurons. Some analog components can also store information in their internal states or dynamics that are analogous to those of biological synapses.
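The saturation behavior mentioned above is easy to picture with a small Python sketch. It assumes a neuron that forms a weighted sum of its inputs and then saturates smoothly, the way an amplifier cannot exceed its supply voltage. The `tanh` curve and the names `analog_neuron` and `v_sat` are assumptions made for this example, not a model of any particular circuit.

```python
import math


def analog_neuron(inputs, weights, bias=0.0, v_sat=1.0):
    """Weighted sum of the inputs followed by smooth saturation at +/- v_sat."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return v_sat * math.tanh(activation / v_sat)


if __name__ == "__main__":
    weights = [0.8, -0.5]
    for scale in (0.1, 1.0, 10.0):
        inputs = [scale, 0.2 * scale]
        print(f"inputs {inputs} -> output {analog_neuron(inputs, weights):+.3f}")
```

For small inputs the output follows the weighted sum almost linearly. For large inputs it flattens out near `v_sat`, which is the same kind of nonlinearity that software neural networks add on purpose as an activation function.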

One example of an analog computer that implements a neural network is the perceptron, proposed by Frank Rosenblatt in 1957. The perceptron is a device that can learn to classify linearly separable patterns by adjusting its weights according to a learning rule based on feedback from its output.

The perceptron consists of an array of photocells that receive light signals from an input pattern (such as an image), an array of potentiometers that act as adjustable weights (or synapses), an operational amplifier that acts as a thresholding unit (or neuron), and a light bulb that acts as an output signal (such as a binary decision).

The perceptron was one of the first attempts to create a machine that could learn from data and perform intelligent tasks. However, in 1969 Marvin Minsky and Seymour Papert showed that a single-layer perceptron has fundamental limitations: it cannot solve problems that are not linearly separable, such as the XOR problem. This contributed to a decline in interest and funding for neural network research until the 1980s, when new methods and architectures were developed to overcome these limitations.

Figure: Perceptron as built by Frank Rosenblatt
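Both the learning rule and its limitation can be tried out in software. The sketch below is a plain Python version of a single-layer perceptron with a threshold output, assuming 0/1 targets and a weight update proportional to the output error. It is an illustration of the idea, not a reconstruction of Rosenblatt's hardware, and the names `train_perceptron` and `predict` are hypothetical.

```python
def predict(weights, bias, inputs):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=50, lr=1.0):
    """Perceptron learning rule on (inputs, target) pairs with 0/1 targets.

    Returns (weights, bias, converged). converged is True once a full
    pass over the data produces no misclassification.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for inputs, target in samples:
            delta = target - predict(weights, bias, inputs)  # -1, 0 or +1
            if delta != 0:
                errors += 1
                # Nudge each weight toward the correct answer, like turning
                # a potentiometer up or down.
                weights = [w + lr * delta * x for w, x in zip(weights, inputs)]
                bias += lr * delta
        if errors == 0:
            return weights, bias, True
    return weights, bias, False


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    for name, data in (("AND", AND_DATA), ("XOR", XOR_DATA)):
        weights, bias, converged = train_perceptron(data)
        print(f"{name}: converged = {converged}, weights = {weights}, bias = {bias}")
```

AND is linearly separable, so training settles on a set of weights. XOR is not, so no amount of extra epochs helps: a single line cannot separate the two classes.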

Avenues to explore

Today, analog computers are being explored again as a possible way of enhancing the performance and efficiency of neural networks, especially for applications that require high speed, low power, or large scale. Some examples of these applications are:

- Image processing and computer vision: Analog computers can process images faster and more efficiently than digital computers by using parallel processing and exploiting the spatial and temporal correlations in the image data. For example, analog computers can perform edge detection, feature extraction, segmentation, filtering, compression, and reconstruction of images by using optical or electrical components that operate on the intensity or frequency of light or voltage signals.

- Signal processing and communication: Analog computers can process signals faster and more efficiently than digital computers by using analog filters, modulators, demodulators, mixers, amplifiers, and oscillators that operate on the amplitude, phase, or frequency of voltage or current signals. For example, analog computers can perform noise reduction, signal enhancement, encryption, decryption, modulation, demodulation, encoding, decoding, compression, and decompression of signals by using analog components that manipulate the signals in their native domain.

- Machine learning and optimization: Analog computers can perform machine learning and optimization tasks faster and more efficiently than digital computers by using analog components that implement gradient descent, stochastic gradient descent, backpropagation, genetic algorithms, simulated annealing, or other optimization techniques that operate on continuous variables. For example, analog computers can perform supervised learning, unsupervised learning, reinforcement learning, or deep learning by using analog components that adjust their parameters according to a cost function or a reward function.
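To see why optimization over continuous variables is a natural fit, note that gradient descent has a continuous-time form: let the parameters move "downhill" at a rate equal to the negative gradient of the cost. That is a differential equation, which is exactly the kind of problem analog integrators solve. The sketch below simulates this gradient flow in Python with small time steps. The cost function, starting point, and function names are hypothetical, chosen only to make the example concrete.

```python
def gradient(x, y):
    """Gradient of the hypothetical cost f(x, y) = (x - 1)**2 + 2 * (y + 2)**2."""
    return 2.0 * (x - 1.0), 4.0 * (y + 2.0)


def gradient_flow(x0, y0, dt=1e-3, t_end=10.0):
    """Integrate dx/dt = -df/dx and dy/dt = -df/dy with small Euler steps."""
    x, y = x0, y0
    for _ in range(int(round(t_end / dt))):
        gx, gy = gradient(x, y)
        x -= gx * dt   # one integrator per parameter, driven by -gradient
        y -= gy * dt
    return x, y


if __name__ == "__main__":
    x, y = gradient_flow(5.0, 5.0)
    print(f"settled at x = {x:.4f}, y = {y:.4f} (minimum is at x = 1, y = -2)")
```

In this picture an analog optimizer would not iterate at all. Its state would simply relax toward the minimum, limited by noise and component precision rather than by step count.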

In Conclusion

Analog computers are devices that use continuous physical quantities to model the problem being solved. They have a long and fascinating history that spans from ancient times to modern times. They have some advantages over digital computers in terms of speed and nonlinearity but also some drawbacks in terms of accuracy and reliability. They may be making a comeback in the field of AI for applications that require high speed, low power, or large scale. They may also offer a new way of understanding and emulating biological neural networks.
